Exploring Methods for Bulk File Transfers from SharePoint — Reactive and Batch Job Approaches

In this post, we walk through how we transfer files in bulk from SharePoint to Azure Blob Storage and then load them into our application. This can be done either reactively or with a batch job.

Setting:

We’ve built a system for document processing and semantic search. Users can upload files of any size and wait for our machine learning workflows to analyze them. The analysis produces key information about each document’s content, helping users grasp its insights quickly. Users can also run semantic searches across a document database pre-loaded into the application.

Scenario:

We needed a bulk-upload feature that could move documents straight from the client’s email inbox into the application. This would spare users from downloading the files and uploading them to the application by hand.

Our customer established an automated system that enables all files contained in emails, identified by a specific pattern in the email header or body, to be directed to a SharePoint folder.

We needed a pipeline that transfers files from the client’s SharePoint to our Azure cloud storage and then loads the file metadata into a database table. From there, our existing pipelines could import the data into the application.

Journey of Discovery:

Reactive Approach:

We started by looking for ways to pick up files from SharePoint reactively: any time a new document is uploaded or an existing one is modified, the updated document should find its way to our cloud storage.

To achieve this, we investigated two solutions: file transfer via Power Automate, and file transfers triggered through SharePoint webhooks.

We’ll walk through the steps involved and the obstacles we ran into with each of them.

File Transfer via Power Automate

Power Automate is a cloud-based, low-code platform. It lets users build automated workflows across various services without writing much code.

Tasks we wanted the Power Automate flow to perform:

Sending a Kafka message is vital for our operations because it signals when we should launch our data load pipeline. This pipeline retrieves files from the blob store for our application.

The initial three steps were straightforward, as Power Automate provides ready-made actions for connecting to SharePoint and Azure once the correct credentials are supplied.

There was no ready-made action for sending a Kafka message after a successful file transfer, so we had to write our own Azure function that connects to Kafka and publishes the message. Because most Kafka connections use certificates, we also had to store these certificates in a blob for the function to read.
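As a rough illustration of that notification step, the sketch below builds a small JSON event for a transferred file and hands it to a producer. The field names, the topic, and the `send` callable are assumptions made for this example and not our actual function. In a real Azure function, `send` would wrap a Kafka client’s produce call, configured with the certificates mentioned above.

```python
import json
from datetime import datetime, timezone
from typing import Callable


def build_file_event(container: str, blob_path: str, source_url: str) -> bytes:
    """Serialize a 'file transferred' event as UTF-8 JSON (hypothetical schema)."""
    event = {
        "container": container,
        "blobPath": blob_path,
        "sourceUrl": source_url,
        "transferredAt": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(event).encode("utf-8")


def publish_file_event(
    send: Callable[[str, bytes], None], topic: str, payload: bytes
) -> None:
    """Pass the payload to whichever producer wrapper was injected as `send`."""
    send(topic, payload)
```

Keeping the producer behind a plain callable means the payload logic can be exercised without a broker, and the certificate handling stays in one place.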

Azure Functions is a serverless computing service that enables any user to compose and run code without worrying about infrastructure setup.

Keep in mind, to establish Azure functions within an Azure account, permissions from the account holders are necessary.

Challenges we encountered in this configuration and the reasons why we chose not to follow this path:

Given how much the latter mattered to us, we decided against this method. Obtaining permissions for the Power Automate flow and the Azure functions proved lengthy because of the many stages of security verification required, particularly as the SharePoint site is client-facing.

Kicking Off File Transfers through SharePoint Webhooks

Before we dive in, our first step will be to carry out Azure App registration. This nifty process lets us set up authentication and authorization for a range of workflows or activities inside the Azure cloud.

In our scenario, we’re interfacing with SharePoint through an application context, often referred to as app-only. At the moment, our organization’s preferred way of implementing app-only access in SharePoint is a SharePoint App-Only principal. This involves granting the Azure app we’ve already registered authorization through SharePoint. The process is outlined below:

Step 1: Create an Azure application in the ‘App Registrations’ section and generate a client-id and client-secret.

**IMPORTANT: Store the client-secret securely as soon as it is generated. Its value can only be copied at the time of creation.

Step 2: Grant the required authorizations to the application in the API (Application Programming Interfaces) Permissions section. Determine whether you need application-level or delegated permissions for the Azure app.

Step 3: On the SharePoint site, navigate to the following web address and enter the Client-ID of the app you registered.

https://<site-domain-of-sharepoint>/sites/<tenant-name-of-sharepoint>/_layouts/15/appinv.aspx

**PLEASE REMEMBER: Swap <site-domain-of-sharepoint> with your specific SharePoint site domain and <tenant-name-of-sharepoint> with the tenant name of your SharePoint site.

Step 4: Enter the following in the permission request XML field:

<AppPermissionRequests AllowAppOnlyPolicy="true">
  <AppPermissionRequest Scope="http://sharepoint/content/sitecollection/web" Right="FullControl" />
</AppPermissionRequests>

This ties the SharePoint site to the client ID and secret of the Azure application, so that we can connect using client-credentials authentication.

**PLEASE NOTE: The XML above is only a guide. Verify the level of permission your own situation needs; Microsoft’s documentation on add-in permissions in SharePoint lists the available scopes and rights.

Once the application has been approved and authenticated by SharePoint, our next step is to form a subscription with the SharePoint tenant. This subscription will enable us to receive notifications every time a file is added or altered in the directory.

Guide to setting up a subscription on SharePoint:

Setting up the subscription takes three requests: obtain an access token, look up the ID of the SharePoint list, and create the subscription.

The first request obtains the access token. It is a POST whose ‘Content-Type’ header declares the body as ‘application/x-www-form-urlencoded’. The form data carries the grant type, client ID, client secret, and resource.

```
curl --location --request POST "https://accounts.accesscontrol.windows.net/<tenant-id>/tokens/OAuth/2" \
  --header "Content-Type: application/x-www-form-urlencoded" \
  --data "grant_type=client_credentials&client_id=<client-id>@<tenant-id>&client_secret=<client-secret>&resource=00000003-0000-0ff1-ce00-000000000000/<sharepoint-site-domain>@<tenant-id>"
```
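For readers who prefer to issue the token request from code, here is a minimal Python sketch of the same call using only the standard library. The function name and the `SHAREPOINT_PRINCIPAL` constant are our own labels for this example. The resource is built as `<principal>/<sharepoint-site-domain>@<tenant-id>`, the form the access control service expects, and `urlencode` takes care of secrets that contain characters such as `&` or `+`.

```python
import urllib.parse
import urllib.request

# Well-known principal ID of SharePoint Online, as used in the curl command.
SHAREPOINT_PRINCIPAL = "00000003-0000-0ff1-ce00-000000000000"


def build_token_request(
    tenant_id: str, client_id: str, client_secret: str, sharepoint_domain: str
) -> urllib.request.Request:
    """Build (but do not send) the client-credentials token request."""
    url = f"https://accounts.accesscontrol.windows.net/{tenant_id}/tokens/OAuth/2"
    form = {
        "grant_type": "client_credentials",
        "client_id": f"{client_id}@{tenant_id}",
        "client_secret": client_secret,
        "resource": f"{SHAREPOINT_PRINCIPAL}/{sharepoint_domain}@{tenant_id}",
    }
    # urlencode percent-escapes every value, so the result is plain ASCII.
    body = urllib.parse.urlencode(form).encode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )
```

Sending it is then a single `urllib.request.urlopen(request)` call; the JSON response carries the token in its `access_token` field.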

The second request is a GET that looks up the ID of the SharePoint list. The ‘Authorization’ header carries the access token obtained in the previous step. The `$` in `$select` is escaped so that the shell does not try to expand it as a variable.

```
curl --location --request GET "https://<sharepoint_site>/sites/<sharepoint_tenant>/_api/web/lists/getbytitle('<sharepoint-folder>')?\$select=Title,Id" \
  --header "Authorization: Bearer <authentication-token>"
```

The third request is a POST that creates the subscription. The ‘Accept’ and ‘Content-Type’ headers specify the type of data expected in the response and sent with the request, respectively. The body holds the resource, the notification URL, the expiration date/time, and the client state.

```
curl --location --request POST "https://<sharepoint_site>/sites/<sharepoint_tenant>/_api/web/lists('<folder-id>')/subscriptions" \
  --header "Authorization: Bearer <authentication-token>" \
  --header "Accept: application/json;odata=nometadata" \
  --header "Content-Type: application/json" \
  --data @- <<'EOF'
{
  "resource": "https://<sharepoint_site>/sites/<sharepoint_tenant>/_api/web/lists('<folder-id>')",
  "notificationUrl": "<webhook-url>",
  "expirationDateTime": "<expiration-date-time>",
  "clientState": "A0A354EC-97D4-4D83-9DDB-144077ADB449"
}
EOF
```

The ‘clientState’ value is an optional string of your choosing. SharePoint echoes it back in every notification, which lets the receiving service check that a notification belongs to this subscription. The ‘expirationDateTime’ is an ISO 8601 timestamp that defines when the webhook expires. Choose it to suit your use case, keeping in mind that SharePoint accepts at most 180 days ahead, so the subscription has to be renewed before then.
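Because SharePoint does not accept an expiration more than 180 days in the future, it can help to compute the timestamp instead of hard-coding it. The sketch below assembles the subscription body that way. The function name, the 90-day default, and the random `clientState` are assumptions for illustration only.

```python
import uuid
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_SUBSCRIPTION_DAYS = 180  # upper bound SharePoint allows for a subscription


def build_subscription_payload(
    list_url: str,
    notification_url: str,
    days_valid: int = 90,
    now: Optional[datetime] = None,
    client_state: Optional[str] = None,
) -> dict:
    """Return the JSON body for the subscription request.

    `now` must be timezone-aware; it is injectable so the result is testable.
    """
    if not 0 < days_valid <= MAX_SUBSCRIPTION_DAYS:
        raise ValueError(f"days_valid must be between 1 and {MAX_SUBSCRIPTION_DAYS}")
    now = now or datetime.now(timezone.utc)
    expires = (now + timedelta(days=days_valid)).astimezone(timezone.utc)
    return {
        "resource": list_url,
        "notificationUrl": notification_url,
        "expirationDateTime": expires.strftime("%Y-%m-%dT%H:%M:%S+00:00"),
        "clientState": client_state or str(uuid.uuid4()),
    }
```

The same helper can be reused by whatever job renews the subscription before it lapses.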

The <webhook-url> is the endpoint that receives the notifications. Behind it, you can implement your own file-transfer logic: when triggered, it transfers files from SharePoint to Azure and sends a Kafka message to the relevant topic.

This service would run as part of our cloud deployment, listening for SharePoint events and forwarding them to the appropriate event-handling service.
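To make the receiving side more concrete, here is a framework-agnostic sketch of what the endpoint has to do. When a subscription is created, SharePoint calls the notification URL with a `validationtoken` query parameter and expects the token echoed back as plain text within a few seconds. Later calls carry a JSON body whose `value` array lists the notifications. The function name and its return shape are our own assumptions; wiring it into a web framework and fetching the actual changes are left out.

```python
import json
from typing import Dict, List, Tuple


def handle_webhook_request(
    query_params: Dict[str, str], body: bytes, expected_client_state: str
) -> Tuple[int, str, str, List[dict]]:
    """Return (status, content_type, response_text, accepted_notifications)."""
    token = query_params.get("validationtoken")
    if token is not None:
        # Subscription handshake: echo the token back as plain text.
        return 200, "text/plain", token, []
    try:
        notifications = json.loads(body or b"{}").get("value", [])
    except (ValueError, AttributeError):
        return 400, "text/plain", "invalid payload", []
    # Keep only notifications that carry the clientState we registered.
    accepted = [
        n
        for n in notifications
        if isinstance(n, dict) and n.get("clientState") == expected_client_state
    ]
    return 200, "text/plain", "", accepted
```

A notification only says that something in the list changed, so the accepted entries would typically be queued for a worker that looks up the changed files and starts the transfer.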

**IMPORTANT: Replace all the placeholder values in the commands above with your own.

Challenges we faced in this configuration:

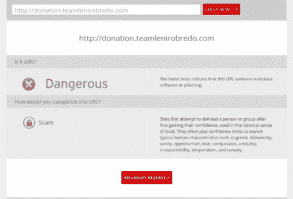

With this setup, we receive a notification and can transfer the file whenever a new or modified file appears in the SharePoint directory we’ve subscribed to. The limitation is that SharePoint can only send notifications to public URLs. Our webhook service is restricted to the organization’s internal network and can only be reached through a proxy service, so SharePoint cannot call it directly. In our situation, we would need to expose the URL externally, which requires various security clearances depending on the organization’s policies.

So instead, we built a job that retrieves the files on a schedule.

Batch Job Approach:

As before, the first step is to grant the Azure app we registered permission on the SharePoint site, just as we did for the webhooks. Once the app is registered and we have the authorized client-id and client-secret, we can connect to SharePoint using the Client Context.

In our scenario, we’ve utilized the Office365-REST-Python-Client Python library for our SharePoint operations. We’ve crafted our own scripts which perform the following:

Support for Azure connections is provided through the fsspec library. After having developed and thoroughly checked the service, we launched it on our cloud-based platform. It’s set to operate at intervals of a few hours, ensuring a continuous update of files from SharePoint to the Azure directory.
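The core loop of such a job might look roughly like the sketch below. `RemoteFile`, `sync_changed_files`, and the `download` and `upload` callables are hypothetical names for this example. In our setup the listing and download would come from the SharePoint client library and the upload from an fsspec filesystem, neither of which is shown here. Selecting files by modification time since the last run is also an assumption of the sketch.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class RemoteFile:
    name: str
    server_relative_url: str
    modified: datetime  # timezone-aware last-modified timestamp


def sync_changed_files(
    files: Iterable[RemoteFile],
    last_run: datetime,
    download: Callable[[str], bytes],
    upload: Callable[[str, bytes], None],
    target_prefix: str,
) -> List[str]:
    """Copy every file modified after `last_run`; return the target paths."""
    copied = []
    # Oldest first, so an interrupted run can resume from the last copied file.
    for f in sorted(files, key=lambda item: item.modified):
        if f.modified <= last_run:
            continue
        target = f"{target_prefix.rstrip('/')}/{f.name}"
        upload(target, download(f.server_relative_url))
        copied.append(target)
    return copied
```

Passing the download and upload steps in as callables keeps the selection logic testable without touching SharePoint or Azure.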

The approach proved effective, and we rolled it out to production smoothly. It gave us a streamlined way to pick up and process files.

This post originally appeared on the Walmart Global Tech Blog on Medium.